Hands-On: Sidecar | Topz (Designing Distributed Systems)

Sidecar Hands-on Exercise: TOPZ

The purpose of this exercise is to explore the "sidecar" pattern of deploying software using containers and container groups.

This exercise has the reader create an arbitrary containerized application and a sidecar in the same namespace. The sidecar provides resource monitoring for the virtual host.

The provided instructions use a purpose-built utility called topz and leave the main application ambiguous (to be determined by the implementer). The implementation is started using Docker CLI commands.

Below is the exercise as defined in the book's text:

Local Setup

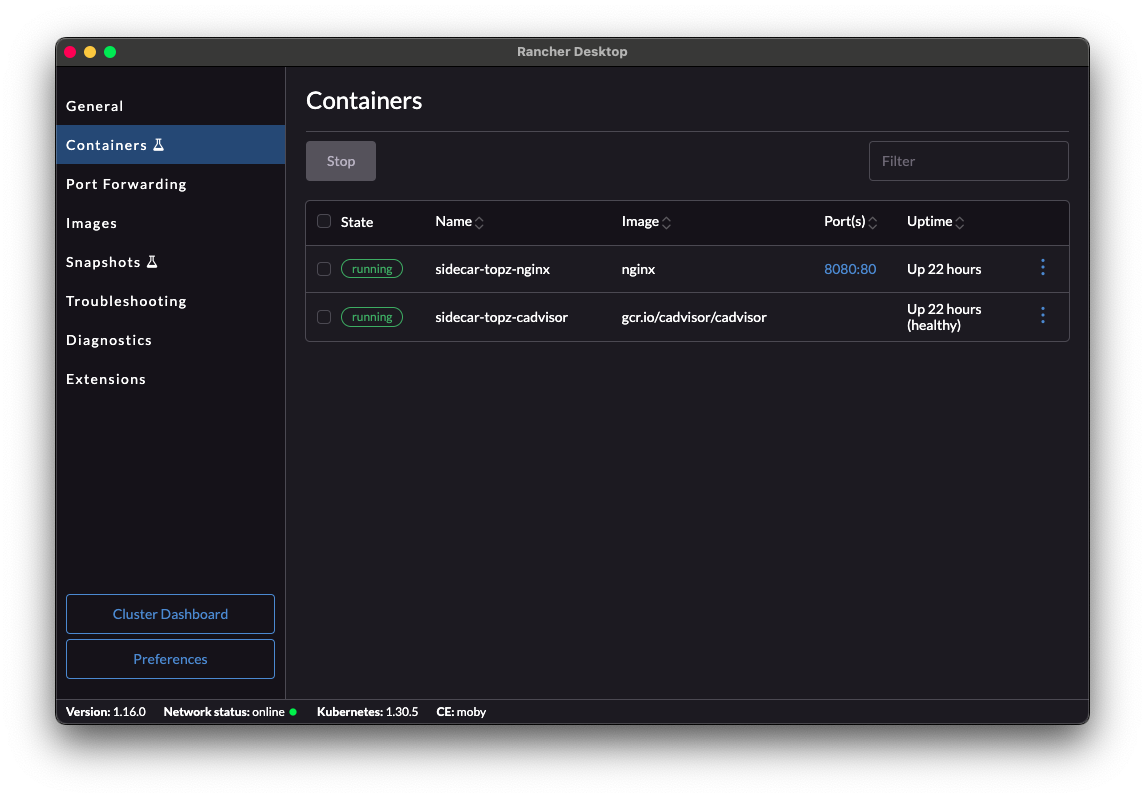

I'm using the default installation of Rancher Desktop (v1.16.0) on Apple M1 silicon, along with its provided dockerd container engine.

Challenges

- The author doesn't provide the main application for the exercise.

In the absence of a specific application to run, and on the advice of my AI code assistant, I've opted to use a standard NGINX container as my base application. This gives me some default behavior for the "main application" that can be observed to verify the setup.

- The provided image expects a Linux/AMD64 architecture. My AI code assistant suggested two alternatives:
  - Use an alternate sidecar application (cAdvisor)
  - Build a multi-architecture image of topz

Since this is all practice, I will try both approaches.

Solutions

- Solution 01

- Solution 02

Overview

This solution applies the suggestion from Nova, my AI code assistant, to replace the topz sidecar (not compatible with either macOS or Apple silicon hardware) with an alternate sidecar. It uses NGINX as the "main application", assuming some static content is being served, and cAdvisor as the sidecar.

Solution Artifacts

The solution is implemented as two files:

- an nginx.conf file that contains the service configuration

- a docker-compose.yaml file that contains the docker network and container information
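To make the two-file layout more concrete, here is a minimal sketch of what such an nginx.conf could look like. It is not the author's actual file: the service name cadvisor, cAdvisor's default port 8080, and NGINX listening on port 80 inside its container (published on the host as 8080) are all assumptions.

```nginx
# Sketch of an nginx.conf for the NGINX-plus-cAdvisor stack.
# Assumptions: compose service "cadvisor" on its default port 8080;
# NGINX listens on 80 in its container (published as 8080 on the host).
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;

        # "Main application": the stock NGINX welcome page
        location / {
            root  /usr/share/nginx/html;
            index index.html;
        }

        # Sidecar: strip the /cadvisor/ prefix and forward to cAdvisor,
        # so /cadvisor/containers/ arrives upstream as /containers/
        location /cadvisor/ {
            proxy_pass http://cadvisor:8080/;
            # Pass the Host header through exactly as the browser sent it
            proxy_set_header Host $http_host;
        }
    }
}
```

Because NGINX resolves the host name cadvisor when it starts and refuses to start if the name cannot be resolved, the nginx service in docker-compose.yaml would likely also need a depends_on entry for cadvisor.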

Solution Design

Running the solution

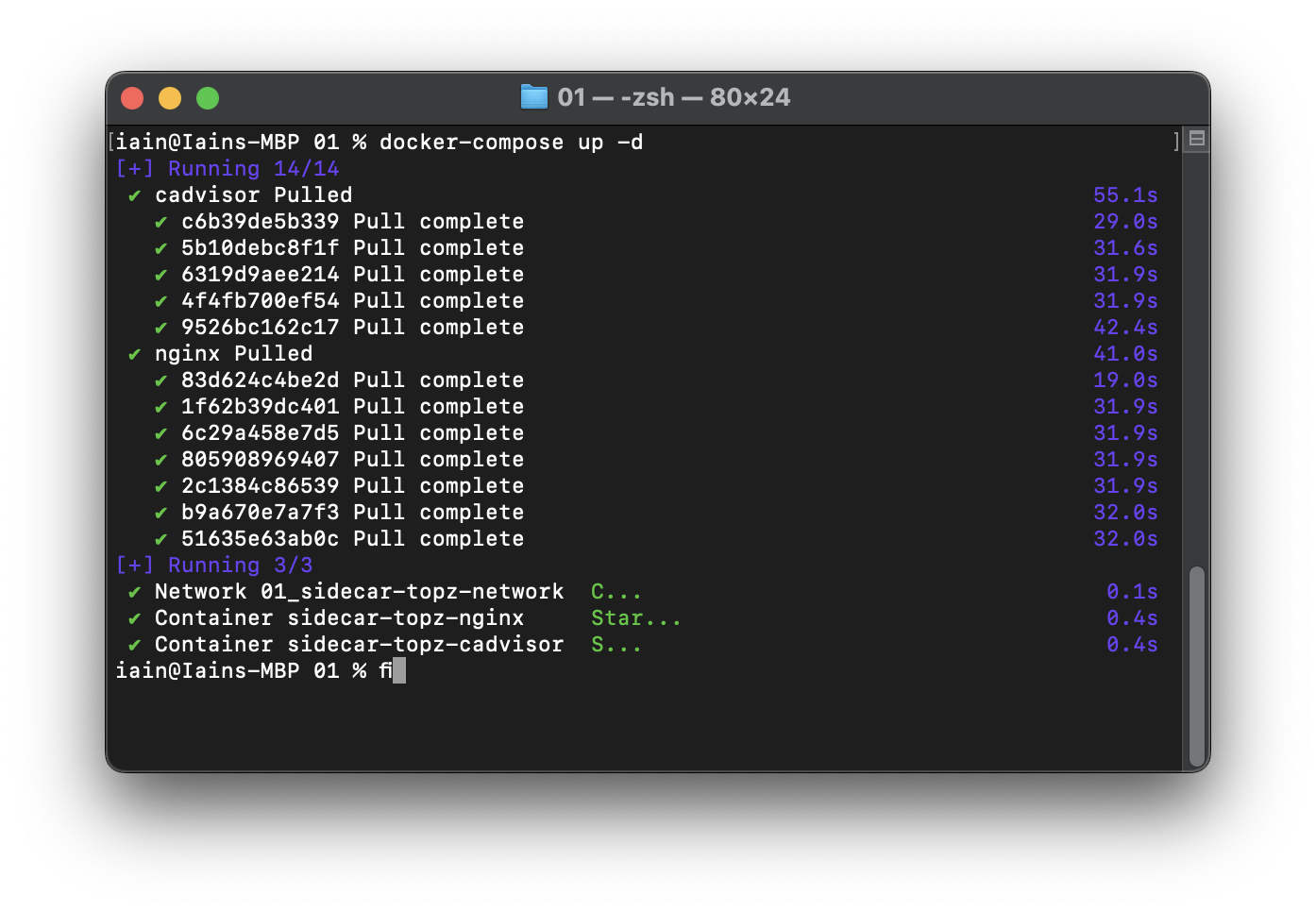

From the directory that contains the solution files, run the following command to start the solution (it will listen on port 8080):

docker-compose up -d

Screen captures

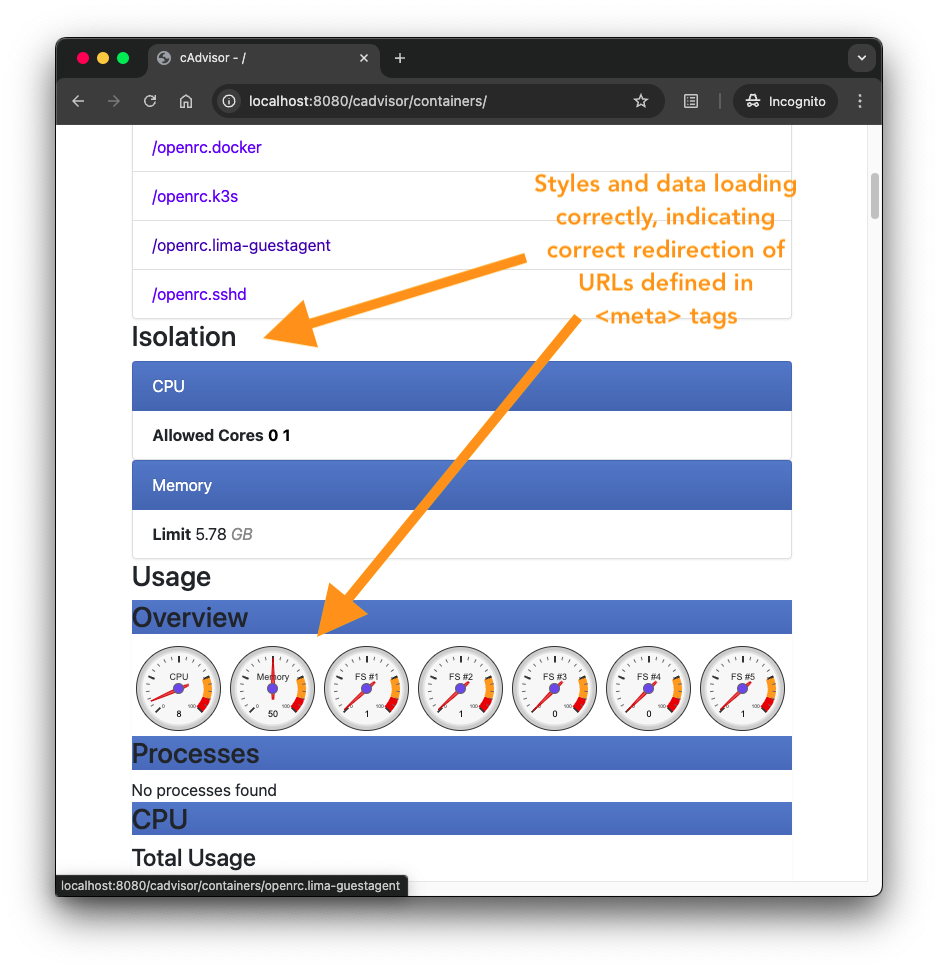

Observe the solution

With the Docker stack running:

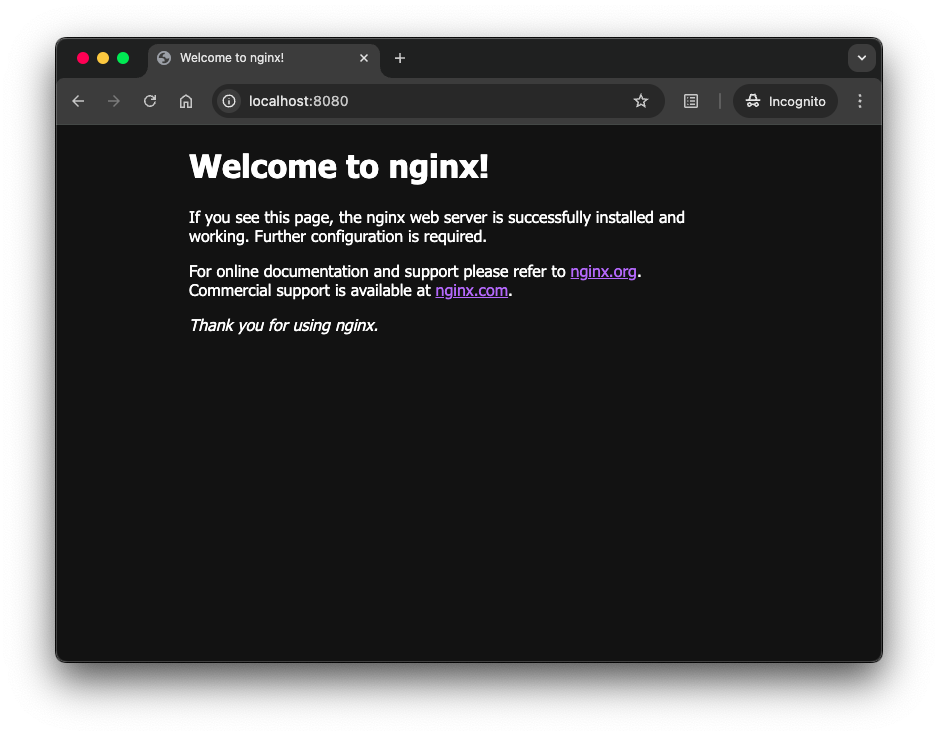

- Point your browser to localhost:8080 to see the default NGINX welcome page. This is a stand-in for the "main application".

- Point your browser to localhost:8080/cadvisor or localhost:8080/cadvisor/containers to see the UI of the cAdvisor sidecar.

- Navigate the cAdvisor pages to make sure the links work and external resources are loading (you should see graphs, gauges, etc., with data updating in real time).

If you're running on macOS and check the browser's Network tab, you will see regularly recurring 500 errors. These occur because cAdvisor invokes a command that runs the ps command-line utility to retrieve some additional system data, and that utility is not available on macOS. The absence of this data doesn't otherwise affect cAdvisor, which remains a useful and valuable tool without these calls.

Screen captures

_Note: navigating to /cadvisor (which has no actual content) redirects to /cadvisor/containers, which is the root of the cAdvisor content._
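One plausible way to get the redirect described in the note is sketched below, assuming cAdvisor answers a request for its root with a root-relative Location header such as /containers/. NGINX automatically answers /cadvisor (no slash) with a redirect to /cadvisor/ when that prefix location is proxied, but by default it builds that absolute URL from the port it listens on inside the container rather than 8080. For that reason the sketch handles the bare path explicitly with $http_host.

```nginx
# Sketch: keep redirects inside the /cadvisor/ namespace.
# Assumption: cAdvisor redirects "/" with a root-relative header such as
# "Location: /containers/".
location /cadvisor/ {
    proxy_pass http://cadvisor:8080/;

    # "/containers/" -> "/cadvisor/containers/"; the browser resolves this
    # relative Location against the origin it used (localhost:8080)
    proxy_redirect / /cadvisor/;
}

# Bare /cadvisor: redirect explicitly, using the Host header the browser
# sent, instead of relying on NGINX's automatic slash-appending redirect
location = /cadvisor {
    return 301 $scheme://$http_host/cadvisor/;
}
```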

Stopping the solution

Navigate to this folder in your clone of this repository and run the following command:

docker-compose down

Screen capture

Solution 01 Challenges

Path and Port Rewriting:

- Fixed cAdvisor's links and assets, which initially lacked the correct paths and port information, leading to broken links and missing resources.

Handling Trailing Slashes and Redirects:

- Configured NGINX to append trailing slashes on specific routes (top-level routes) that otherwise report a 404. Note that for routes below the top level (e.g., /containers/docker), including the trailing slash is an error.

- Addressed issues where URLs were incorrectly rewritten, causing multiple redirects or incorrect paths.
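The trailing-slash rule could be expressed with a single regex location, sketched here under the assumption that the affected top-level routes are /containers and /docker (the two named elsewhere in this write-up) and that they sit under the /cadvisor prefix. A matching regex location takes precedence over the plain /cadvisor/ prefix location, so only the exact top-level paths are redirected.

```nginx
# Sketch: append the trailing slash only on top-level cAdvisor routes.
# Route names are an assumption taken from the paths named in the text.
# The $ anchor keeps deeper routes (e.g. /cadvisor/containers/docker)
# from matching, so they are proxied unchanged.
location ~ ^/cadvisor/(containers|docker)$ {
    # $1 is the captured route name; $http_host keeps the browser's port
    return 301 $scheme://$http_host/cadvisor/$1/;
}
```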

Caching and Profile-Specific Issues:

- Resolved persistent caching issues in which browser profiles retained outdated URL mappings, which got in the way of debugging.
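If the stale mappings come from cached redirects or pages (an assumption; the exact cause isn't recorded here), a development-only sketch like the following can discourage the browser from holding on to them while the configuration is still changing.

```nginx
# Development-only sketch: discourage browser caching under /cadvisor
# while the configuration is still changing.
location /cadvisor/ {
    proxy_pass http://cadvisor:8080/;

    # Drop cAdvisor's own Cache-Control header, then send a no-store one
    proxy_hide_header Cache-Control;
    add_header Cache-Control "no-store" always;
}

# Hand-written redirects: use 302 while iterating. Browsers may keep a
# 301 for a long time; a 302 is not cached unless headers allow it.
location = /cadvisor {
    return 302 $scheme://$http_host/cadvisor/;
}
```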

Optimizing Resource Buffering:

- Increased NGINX buffer sizes and disabled disk buffering to handle the larger, more data-intensive API responses from the cAdvisor upstream. This was largely a developer-experience issue: I like to view log output in [lnav](https://lnav.org/ "The LogFile Navigator | command-line log viewer"), and the warnings about exceeded buffer sizes were disrupting the lnav display.
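Here is a sketch of the buffering change using ngx_http_proxy_module directives. The sizes are illustrative rather than the author's values. Setting proxy_max_temp_file_size to 0 disables buffering responses to temporary files, which is the condition behind NGINX's "an upstream response is buffered to a temporary file" warnings.

```nginx
# Sketch: give cAdvisor's larger API responses room in memory and stop
# NGINX from spilling them to temporary files on disk.
# The sizes are illustrative, not tuned values.
location /cadvisor/ {
    proxy_pass http://cadvisor:8080/;

    proxy_buffer_size       16k;    # first part of the response (headers)
    proxy_buffers           8 32k;  # per-connection buffers for the body
    proxy_busy_buffers_size 64k;    # portion that may be busy sending to the client
    # 0 disables buffering to temporary files; anything beyond the
    # in-memory buffers is passed to the client synchronously
    proxy_max_temp_file_size 0;
}
```

proxy_busy_buffers_size has to be at least as large as one buffer and smaller than the total of proxy_buffers minus one buffer, which the values above satisfy (64k versus 256k - 32k).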

Comments

This turned out to be as much an exercise in learning NGINX as a lesson in the sidecar pattern, if not more so, but I seem to have gotten there in the end.

I've gone through several iterations, and only the final one is captured here. The first iteration exposed all of the cAdvisor paths as top-level paths just below the root (e.g., /containers, /docker, etc.). It occurred to me that, to apply the sidecar as a general pattern, it was probably wiser to use a less ambiguous path (some hypothetical "main application" might conceivably require a /containers path at the root) and to occupy only one namespace for the cAdvisor paths. The later iteration captured here takes that approach, using sub_filter directives to rewrite URLs in the HTML returned from the upstream cAdvisor service, so that all URLs meant to reach the sidecar have paths prefixed with /cadvisor.
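The sub_filter approach might look roughly like this. It assumes the nginx image includes ngx_http_sub_module (the official nginx image does) and guesses at the attribute patterns in cAdvisor's HTML, so treat the match strings as placeholders to check against the actual markup.

```nginx
# Sketch: prefix root-relative links in cAdvisor's HTML with /cadvisor.
# The match strings are assumptions about cAdvisor's markup.
location /cadvisor/ {
    proxy_pass http://cadvisor:8080/;

    # sub_filter cannot rewrite compressed bodies, so request them
    # uncompressed from the upstream
    proxy_set_header Accept-Encoding "";

    # Replace every occurrence, not only the first one (the default)
    sub_filter_once off;

    # Only values starting with a slash are touched; plain relative paths
    # are left alone. Caveat: protocol-relative URLs (href="//...")
    # would also match these patterns.
    sub_filter 'href="/' 'href="/cadvisor/';
    sub_filter 'src="/'  'src="/cadvisor/';
}
```

By default sub_filter only processes text/html responses, so paths assembled inside JavaScript would need sub_filter_types and additional patterns, which this sketch does not attempt.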

A good deal of this work was also teasing out which of the AI's suggestions were valuable and which were not. For example, when some links were not working correctly, the AI suggested rewriting all relative paths, which had disastrous consequences for loading non-HTML resources like stylesheets and scripts, and proved unnecessary anyway. Another dubious AI suggestion was using $host:$server_port to force rewritten URLs to carry the correct port. It turns out the correct solution was to use the $http_host variable, but the AI continued to suggest the other usage.
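The difference between the two expressions can be observed directly. $host is the request's host name without any port, $server_port is the port of the server that accepted the request (inside a container, the container-side port), and $http_host is the Host request header verbatim. Under the assumed 8080-to-80 port mapping, a request to localhost:8080 would therefore be expected to log host_server_port=localhost:80 but http_host=localhost:8080, which fits the finding that $http_host produced the correct port. The log format name hostcheck is hypothetical.

```nginx
# Diagnostic sketch (fragment of nginx.conf): log both candidate
# expressions for every request so their values can be compared.
http {
    log_format hostcheck '$remote_addr "$request" '
                         'host_server_port=$host:$server_port '
                         'http_host=$http_host';

    server {
        listen 80;
        # In the official nginx image this file is linked to stdout,
        # so entries show up in "docker-compose logs"
        access_log /var/log/nginx/access.log hostcheck;

        # ... existing location blocks ...
    }
}
```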

Taking a step back to more general observations, I find that the AI (in this case ChatGPT 4o) does pretty well with general-purpose languages like JavaScript, but falters a bit with purpose-built languages like NGINX's configuration language or other DSLs. I'm not sure that observation will hold up to scrutiny over time, but I will keep it in mind.
